Semester and Thesis Projects

The ETH AI Center offers a wide range of semester and thesis projects for students at ETH Zurich, as well as other universities. Please see the list below for projects that are currently available.

How do you publish a thesis or semester project with the ETH AI Center?

- Academia: If you are affiliated with the ETH AI Center as a faculty member, postdoctoral fellow, or doctoral fellow, please add the following affiliation to your SiROP account. If you tag your thesis project with this affiliation, it should appear in the list below.

- 'ETH Competence Center - ETH AI Center (ETHZ)'

- Industry: If you represent a company that has a corporate partnership with the ETH AI Center, please contact .

Need help?

- We provide a template for thesis project announcements that you can use for your upcoming project and upload to SiROP.

- Is your thesis project still missing from the list below? We are constantly adding new thesis projects that are available within the ETH AI Center.

- Are you a student? Check out our Semester and Thesis projects below!

Towards Adaptive Auditory Neural-Network Based Prosthesis

This project focuses on developing targeted sound extraction algorithms for audio prostheses using audio-only or combined audio and vision input. You will design and implement signal processing techniques and machine learning models tailored for hearing aids or embedded audio devices. The work involves optimizing neural architectures, exploring trade-offs between computation cost and accuracy, and validating the system using real-world audio and visual data or data collected from augmented reality glasses.

Qualifications: Experience with Python, signal processing, and training neural networks, preferably also with embedded audio algorithms and VR/AR.

Application process: Write to us about your interests and include your CV and transcript, and we can arrange a meeting. We can supervise students from UZH and ETH. We offer semester projects as well as bachelor's and master's thesis projects.

Keywords

audio signal processing, deep neural networks, efficient networks, audio prosthesis, VR/AR, hearing aids

Labels

Semester Project , Bachelor Thesis , Master Thesis , Other specific labels , ETH Zurich (ETHZ)

More information

Published since: 2026-02-03 , Earliest start: 2025-11-17

Applications limited to ETH Zurich , University of Zurich

Organization ETH Competence Center - ETH AI Center

Hosts Liu Shih-Chii

Topics Information, Computing and Communication Sciences , Engineering and Technology

Crowd Simulation for RL Robot Navigation

This project focuses on improving RL-based social navigation by creating a simulation framework with diverse and realistic human behaviors. Current RL methods often train on simplified crowds where all pedestrians behave similarly, which limits generalization in real-world environments.

Keywords

RL, Robot Navigation

Labels

Master Thesis

More information

Published since: 2026-01-26 , Earliest start: 2026-01-26 , Latest end: 2026-09-01

Applications limited to ETH Zurich

Organization Spinal Cord Injury & Artificial Intelligence Lab

Hosts Alyassi Rashid

Topics Engineering and Technology

Learning-based Control and Motion Analysis of Biomimetic Tendon-driven Fish Robot

The student will use a provided simulation and reinforcement learning pipeline to identify and model a real robotic fish, train a control policy, deploy it on hardware, and compare its motion to biological swimming under real-world constraints.

Keywords

robotics, simulation, sim-to-real, underwater

Labels

Semester Project , Bachelor Thesis , Master Thesis

More information

Published since: 2026-01-24 , Earliest start: 2026-02-16 , Latest end: 2026-11-01

Organization ETH Competence Center - ETH AI Center

Hosts Michelis Mike

Topics Information, Computing and Communication Sciences

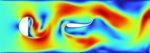

Benchmarking Neural Surrogate Models for Computational Fluid Dynamics

Comparing various neural network surrogate models on computational fluid dynamics problems.

Keywords

surrogate modeling, deep learning, fluid simulation

Labels

Semester Project , Internship , Bachelor Thesis

More information

Published since: 2025-12-30 , Earliest start: 2026-01-01 , Latest end: 2026-12-31

Organization ETH Competence Center - ETH AI Center

Hosts Michelis Mike

Topics Information, Computing and Communication Sciences , Engineering and Technology , Physics

Iterative Optimization for 3D Computational Soft Swimmer Design

Extending an iterative surrogate model optimization framework to include soft bodies with fluid-structure interaction. Design optimization will then be shown in 2D and 3D on passive soft swimmers.

Keywords

surrogate modeling, deep learning, fluid simulation, optimization

Labels

Semester Project , Bachelor Thesis , Master Thesis

More information

Published since: 2025-12-30 , Earliest start: 2026-01-01 , Latest end: 2026-12-31

Organization ETH Competence Center - ETH AI Center

Hosts Katzschmann Robert, Prof. Dr. , Michelis Mike

Topics Mathematical Sciences , Information, Computing and Communication Sciences , Engineering and Technology

Brain Machine Interface - Visual Neuroprosthetics

Join the Sensors Group at the Institute of Neuroinformatics (INI) to develop next-generation visual neuroprosthetics and advance the future of brain-machine interfaces!

Topics include:

- developing neural networks that learn optimal stimulation patterns

- using recurrent/spiking neural networks to create stimulation patterns

- implementing real-time computation on embedded platforms (FPGA, microcontroller, Jetson)

- investigating closed-loop control strategies for electrical brain stimulation

Application process: Write to us about your interests and include your CV and transcript, and we can arrange a meeting. We can supervise students from UZH and ETH. We offer semester projects as well as bachelor's and master's thesis projects.

Keywords

brain machine interface, visual neuroprosthetics, bmi, neural networks, Real-time computation, Embedded platforms, FPGA, Closed-loop control, neural recording analysis, Control systems, Deep learning, Verilog, Vivado, hls4ml, Hardware acceleration, Jetson, VR, Android, Unity/Blender

Labels

Semester Project , Master Thesis , ETH Zurich (ETHZ)

More information

Published since: 2025-11-20 , Earliest start: 2025-02-01 , Latest end: 2026-12-31

Applications limited to ETH Zurich , University of Zurich

Organization ETH Competence Center - ETH AI Center

Hosts Moure Pehuen , Liu Shih-Chii , Hahn Niklas

Topics Information, Computing and Communication Sciences , Engineering and Technology

Toward Human-Like Perception with Multisensory AR Glasses

How can we give machines a perceptual experience closer to that of humans — fast, continuous, and adaptive? At the Sensors Group at the Institute of Neuroinformatics, we explore bio-inspired sensing and computation to bring human-like perception to real-world devices. In this project, you will work with state-of-the-art multimodal sensing devices, including AR glasses (RGB cameras, IMU, audio) and bio-inspired event cameras. Together, these sensors offer a unique opportunity to study how multiple sensory modalities can be fused to perceive and interpret the world in real time. Your research will advance real-time sensor fusion, embodied perception, and neural-inspired computation, forming the foundation for future research in AR scene understanding and augmented human-environment interaction.

Keywords

Multimodal Perception, Sensor Fusion, Augmented Reality, Event-Based Vision, Real-Time Systems

Labels

Semester Project , Bachelor Thesis , Master Thesis

More information

Published since: 2025-10-17 , Earliest start: 2025-10-20 , Latest end: 2026-02-28

Applications limited to ETH Zurich , University of Zurich

Organization ETH Competence Center - ETH AI Center

Hosts Liu Shih-Chii , Li Zixiao

Topics Information, Computing and Communication Sciences , Engineering and Technology